10+ OpenAI GPT-3 GitHub

Recent work has demonstrated substantial gains on many NLP tasks and benchmarks by pre-training on a large corpus of text followed by fine-tuning on a specific task.

Create AI Content Generator with Python Flask and OpenAI GPT-3 (YouTube)

GPT-2 wasn't a particularly novel architecture.

September 1, 2020. Build an advanced OpenAI model in a few lines. The organization's main backers are the investor and entrepreneur Elon Musk and the company Microsoft. OpenAI's goal is artificial intelligence on an open…

In November 2019, OpenAI finally released its largest GPT-2 model, with 1.5B parameters. Streaming allows you to get results as they are generated, which can help your application feel more responsive. OpenAI is an AI research and deployment company.
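Streamed results arrive as server-sent events: each event is a `data: ` line carrying a JSON chunk, and the stream ends with `data: [DONE]`. The sketch below parses such lines offline and concatenates the text fragments. It assumes the legacy Completions chunk shape (`choices[0]["text"]`); the function name `iter_stream_text` is hypothetical and the sample events are hand-written, not captured API output.

```python
import json
from typing import Iterable, Iterator


def iter_stream_text(lines: Iterable[str]) -> Iterator[str]:
    """Yield text fragments from server-sent-event lines as they arrive.

    Assumption: legacy Completions streaming, where each event is a
    `data: {...}` line whose JSON has choices[0]["text"], and the stream
    is terminated by `data: [DONE]`.
    """
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank keep-alive lines and other SSE fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream sentinel
        chunk = json.loads(payload)
        yield chunk["choices"][0]["text"]


# Hypothetical sample events, written by hand for illustration.
sample_events = [
    'data: {"choices": [{"text": "Hello"}]}',
    "",
    'data: {"choices": [{"text": ", world"}]}',
    "data: [DONE]",
]

pieces = []
for piece in iter_stream_text(sample_events):
    pieces.append(piece)  # a real UI would render each piece immediately
print("".join(pieces))
```

Because the parser is a generator, each fragment can be shown to the user before the rest of the response exists, which is where the responsiveness comes from.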

Preview environment in Power Platform. Generative Pre-trained Transformer 3 (GPT-3). OpenAI's model is better at taking wildly differing concepts and figuring out creative ways to glue them together, even if the end result isn't perfect.

Many years ago, I came across a remark by TJ Holowaychuk, a full-stack engineer and author of open-source projects on GitHub: "I don't read books, never went to school, I just read other people's code and always wonder how things work." This repository is a list of machine learning libraries written in Rust. OpenAI LP is a company, controlled by the non-profit organization OpenAI Inc, that conducts research into artificial intelligence (AI).

It's a compilation of GitHub repositories, blogs, books, movies, discussions, papers, etc. To get started with GPT-3, you need the following things. GPT-3 has 175 billion parameters and would require 355 GPU-years and $4,600,000 to train, even with the lowest-priced GPU cloud on the market.
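The 355-year figure can be roughly reproduced from public numbers. This sketch assumes the common rule of thumb of about 6 FLOPs per parameter per training token, GPT-3's 300 billion training tokens, and a sustained 28 TFLOPS on a single GPU (an assumption for one V100-class card). The $4.6M figure is only used to back out the implied hourly price; no new pricing data is introduced.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600
HOURS_PER_YEAR = 365 * 24


def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate training compute with the ~6 * N * D rule of thumb."""
    return 6 * n_params * n_tokens


def gpu_years(total_flops: float, flops_per_second: float) -> float:
    """Wall-clock years on one GPU sustaining `flops_per_second`."""
    return total_flops / flops_per_second / SECONDS_PER_YEAR


flops = training_flops(175e9, 300e9)   # 175B parameters x 300B tokens
years = gpu_years(flops, 28e12)        # assumed 28 TFLOPS sustained
implied_price = 4_600_000 / (years * HOURS_PER_YEAR)  # dollars per GPU-hour

print(f"{flops:.3g} FLOPs, {years:.0f} GPU-years, ${implied_price:.2f}/GPU-hour")
```

This lands at roughly 357 GPU-years, close to the quoted 355; the small gap comes from the 6ND approximation versus the roughly 3.14e23 FLOPs usually quoted for GPT-3.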

However, if you are new to the OpenAI API, follow the intro tutorial for in-depth details. If Stable Diffusion wants to use a very large text encoder, they will need to train it themselves. Machine Learning's Most Useful Multitool.

This makes me think back to the controversy over GitHub Copilot. It takes fewer than 100 examples to start seeing the benefits of fine-tuning GPT-3, and performance continues to improve as you add more data. This announcement came at Microsoft Build in May 2021.
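Training data for the original GPT-3 fine-tuning endpoint was supplied as JSON Lines, one `prompt`/`completion` pair per line. The sketch below writes such a file. The separator `\n\n###\n\n`, the leading space on completions, and the `\n` stop token follow conventions from OpenAI's legacy fine-tuning guidance and should be treated as assumptions; the helper names and example pairs are hypothetical.

```python
import json
import tempfile
from pathlib import Path

# Assumed formatting conventions from the legacy guide; check current docs.
PROMPT_SEPARATOR = "\n\n###\n\n"
STOP_SEQUENCE = "\n"


def to_record(prompt: str, completion: str) -> dict:
    """Format one training pair in the prompt/completion style (hypothetical helper)."""
    return {
        "prompt": prompt.strip() + PROMPT_SEPARATOR,
        # A leading space lets the completion start on a token boundary.
        "completion": " " + completion.strip() + STOP_SEQUENCE,
    }


def write_jsonl(pairs, path: Path) -> int:
    """Write (prompt, completion) pairs as JSON Lines and return how many were written."""
    count = 0
    with path.open("w", encoding="utf-8") as f:
        for prompt, completion in pairs:
            f.write(json.dumps(to_record(prompt, completion)) + "\n")
            count += 1
    return count


examples = [  # hypothetical sentiment-labelling pairs
    ("The battery died after an hour.", "negative"),
    ("Setup took two minutes and it just works.", "positive"),
]
out = Path(tempfile.gettempdir()) / "train.jsonl"
n = write_jsonl(examples, out)
print(n, "examples written to", out)
```

Keeping the separator and stop token identical across every example is the point of the convention: at inference time the same separator ends the prompt and the same stop token ends generation.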

00:46:13 Help yourself to the show notes. Initially, OpenAI decided to release only a smaller version of GPT-2, with 117M parameters. Hugging Face Transformers.

This article covered all the steps to fine-tune GPT-3, assuming no previous knowledge. GPT-J is an open-source alternative to OpenAI's GPT-3. 00:44:35 DALL-E is a transformer.

GPT-3 is built by OpenAI, an independent AI research and development company co-founded by Elon Musk, but it runs on Microsoft Azure and is powered by Azure Machine Learning. GPT-3 from OpenAI has captured public attention unlike any other AI model in the 21st century. The training method is…

GPT-3 is an autoregressive language model that uses deep learning to produce human-like text. By Chuan Li, PhD. What is GPT-3?
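"Autoregressive" means each new token is predicted from the tokens generated so far and then fed back in as input for the next step. The toy sketch below shows only that loop. A word-level bigram count table stands in for the model (GPT-3 instead uses a transformer over a much longer context), decoding is greedy, and the function names and corpus are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(text: str) -> dict:
    """Count which word follows which: a toy stand-in for a language model."""
    words = text.split()
    table = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        table[prev][nxt] += 1
    return table


def generate(table: dict, start: str, max_new_tokens: int) -> list:
    """Autoregressive loop: predict the next token from the current context,
    append it, and feed the extended sequence back in."""
    tokens = [start]
    for _ in range(max_new_tokens):
        followers = table.get(tokens[-1])  # this toy model only sees the last token
        if not followers:
            break  # no known continuation
        next_token = followers.most_common(1)[0][0]  # greedy choice
        tokens.append(next_token)
    return tokens


corpus = "the model reads the prompt and the model writes the answer"
table = train_bigram(corpus)
print(" ".join(generate(table, "the", 4)))
```

Swapping the bigram table for a neural network that scores the whole preceding sequence changes the quality of each prediction, not the shape of the loop.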

OpenAI recently published GPT-3, the largest language model trained up to that point. Otherwise, I'm sure Microsoft won't mind my new game-maker AI that I trained on that new Halo game last year, or this OS AI that I trained on Windows 11. These papers will give you a broad overview of AI research advancements this year.

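GPT-3, newly proposed by OpenAI, has sparked heated discussion online: its parameter count is ten times that of Turing-NLG, the world's largest deep learning model when Microsoft released it just that February, and beyond answering questions, translating, and writing articles better, it also shows some ability at arithmetic. Today I will show you code generation using GPT-3 and Python. It returns a CompletionResult, which is mostly metadata, so use its ToString method to get the text if all you want is the completion.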
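A common pattern for code generation with GPT-3 is to phrase the request as the beginning of a source file: a schema written as comments, a natural-language instruction, and the first keyword of the answer, so that the most likely continuation is the code itself. The sketch below only builds such a prompt for SQL. Its layout is modeled loosely on OpenAI's natural-language-to-SQL example, `build_sql_prompt` and the table names are hypothetical, and the API call that would consume the prompt is omitted.

```python
def build_sql_prompt(tables: dict, request: str) -> str:
    """Frame a natural-language request so that SQL is the natural continuation.

    `tables` maps a table name to its column names (hypothetical schema).
    """
    lines = ["### Postgres SQL tables, with their properties:", "#"]
    for name, columns in tables.items():
        lines.append(f"# {name}({', '.join(columns)})")
    lines.append("#")
    lines.append(f"### {request}")
    lines.append("SELECT")  # prime the model to start writing the query
    return "\n".join(lines)


schema = {
    "Employee": ["id", "name", "department_id"],
    "Department": ["id", "name", "address"],
}
prompt = build_sql_prompt(
    schema, "A query to list the names of departments with more than 10 employees"
)
print(prompt)
```

When such a prompt is sent, a stop sequence (for example `#` or `;`) is typically supplied so generation ends after a single query.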

The architecture is a standard transformer network (with a few engineering tweaks) of unprecedented size: a 2048-token context and 175 billion parameters, requiring 800 GB of storage. You can create your CompletionRequest ahead of time or use one of the helper overloads for convenience.
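The 175-billion figure follows from the layer shapes. The GPT-3 paper describes using the same model and architecture as GPT-2 apart from alternating dense and locally banded sparse attention patterns, which change which positions attend to each other, not the weight shapes. The sketch counts weights for a GPT-2-style decoder, assuming tied input/output embeddings, learned position embeddings, a 4x-wide MLP, and biases plus LayerNorm parameters in every block; it plugs in GPT-3's 96 layers, width 12,288, and GPT-2's 50,257-token vocabulary. The function name is hypothetical.

```python
def gpt_param_count(n_layer: int, d_model: int, vocab_size: int, n_ctx: int) -> int:
    """Count parameters of a GPT-2-style decoder-only transformer.

    Assumes tied input/output embeddings, learned position embeddings,
    a 4x-wide MLP, and biases plus LayerNorm scale/shift in every block.
    """
    embeddings = vocab_size * d_model + n_ctx * d_model
    per_block = (
        2 * d_model                              # LayerNorm before attention
        + d_model * 3 * d_model + 3 * d_model    # fused Q, K, V projection
        + d_model * d_model + d_model            # attention output projection
        + 2 * d_model                            # LayerNorm before MLP
        + d_model * 4 * d_model + 4 * d_model    # MLP up-projection
        + 4 * d_model * d_model + d_model        # MLP down-projection
    )
    final_norm = 2 * d_model
    return embeddings + n_layer * per_block + final_norm


gpt2_small = gpt_param_count(n_layer=12, d_model=768, vocab_size=50257, n_ctx=1024)
gpt3 = gpt_param_count(n_layer=96, d_model=12288, vocab_size=50257, n_ctx=2048)
print(f"GPT-2 small: {gpt2_small:,}")  # 124,439,808
print(f"GPT-3: {gpt3 / 1e9:.1f}B parameters")
```

The GPT-2 result is the ~124M figure that the checkpoint first released as "117M" is now usually quoted as, and the GPT-3 result comes to about 174.6B, which rounds to the published 175B. Almost all of it sits in the 12·d_model² weights per block.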

OpenAI's GPT-2 exhibited an impressive ability to write coherent and passionate essays that exceeded what we anticipated current language models could produce. Check out our new post on GPT-3.

A Semi-Deep Dive. This AI Generates Code, Websites, Songs and More From Words. Of all the AI models in existence to date, the most … artificial general intelligence (General Int…

Our mission is to ensure that artificial general intelligence benefits all of humanity. How to generate Python, SQL, JS, and CSS code using GPT-3 and Python (tutorial). Massive language models like GPT-3 are starting to surprise us with their abilities.

Then follow the instructions in their GitHub repo [1], and you should be good to go. The tech world is abuzz with GPT-3 hype. To help you catch up on essential reading, we've summarized 10 important machine learning research papers from 2020.

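The model is trained on the Pile and is available for use with Mesh Transformer JAX. Now, thanks to EleutherAI, anyone can download and use a 6B-parameter GPT-3-style model. The original dataset files may have been updated; in that case the TFDS dataset builder should be updated: please open a new GitHub issue or pull request, and register the new checksums with `tfds build --register_checksums`. GPT-3 is an AI model developed by OpenAI; it was trained on a dataset of a whopping 300 billion tokens and has 175 billion parameters.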

A Hitchhiker's Guide (Update 1). The following post will focus on GPT-3 model performance tracking and manipulating the hyperparameters.
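If the hyperparameters in question are the sampling settings exposed by the GPT-3 API, the two most commonly tuned are `temperature` and `top_p`. The sketch below shows what they do to a next-token distribution: temperature divides the logits before the softmax, and top-p (nucleus) filtering keeps the smallest set of most-likely tokens whose probabilities reach `top_p`, then renormalizes. The logits are made-up numbers, and this illustrates the standard techniques, not OpenAI's server-side implementation.

```python
import math


def softmax_with_temperature(logits: dict, temperature: float) -> dict:
    """Convert logits to probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive (use argmax for greedy decoding)")
    scaled = {tok: v / temperature for tok, v in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(v - peak) for tok, v in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def top_p_filter(probs: dict, top_p: float) -> dict:
    """Keep the most likely tokens until their cumulative probability reaches top_p."""
    kept, cumulative = {}, 0.0
    for tok, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[tok] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}  # renormalize survivors


logits = {"cat": 2.0, "dog": 1.5, "car": 0.2, "banana": -1.0}  # hypothetical
for t in (0.5, 1.0, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(t, {tok: round(p, 3) for tok, p in probs.items()})
print(top_p_filter(softmax_with_temperature(logits, 1.0), top_p=0.9))
```

Both settings trade diversity for predictability, which is why tuning one of them at a time is usually easier to reason about than changing both.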

Build a Machine Learning Model in 10 Minutes. The sheer flexibility of the model in performing a series of generalized tasks with near-human… 00:38:19 GPT-3 and GitHub Copilot.

If these AIs are going to be trained on other people's IP, then somebody needs to be held accountable when they commit plagiarism. The decision not to release larger models was taken due to concerns about large language models being used to generate deceptive, biased, or abusive language at scale. You basically just need to clone the repo, set up a conda environment, and make the weights available.

OpenAI's new image-generating model does more than just paint pictures (December 9, 2020). Jodie continues to share multiple resources to help you continue exploring modeling and NLP with Python.

OpenAI researchers demonstrated how deep reinforcement learning techniques can achieve superhuman performance in Dota 2. In research published last June, we showed how fine-tuning with fewer than 100 examples can improve GPT-3's performance on certain tasks. We've also found that each doubling of the number of examples tends to improve… GitHub - vaaaaanquish/Awe…

OpenAI never publicly released the huge CLIP model it used for DALL-E 2; it only released smaller models, like the one currently being used. We also discuss the two major transformer models, BERT and GPT-3. While typically task-agnostic in architecture, this method still requires task-specific fine-tuning datasets of thousands or tens of thousands of examples.

By contrast, humans can generally perform a new language task from only a few examples or from simple instructions.
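GPT-3's answer to this gap is few-shot, in-context learning: instead of updating weights, a task description and a handful of solved examples are placed in the prompt, and the model continues the pattern. The sketch below builds such a prompt from the English-to-French example in the GPT-3 paper; the `=>` layout mirrors that figure, while `build_few_shot_prompt` itself is a hypothetical helper.

```python
def build_few_shot_prompt(task: str, examples: list, query: str, sep: str = " => ") -> str:
    """Task description, then solved examples, then the unsolved query.

    The model is expected to continue the text after the final separator;
    no weights are updated.
    """
    lines = [task]
    lines += [f"{source}{sep}{target}" for source, target in examples]
    lines.append(f"{query}{sep.rstrip()}")  # leave the answer slot open
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Translate English to French:",
    [("sea otter", "loutre de mer"), ("peppermint", "menthe poivrée")],
    "cheese",
)
print(prompt)
```

Passing an empty example list gives the zero-shot setting, and a single example gives one-shot; the paper compares all three against fine-tuning.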

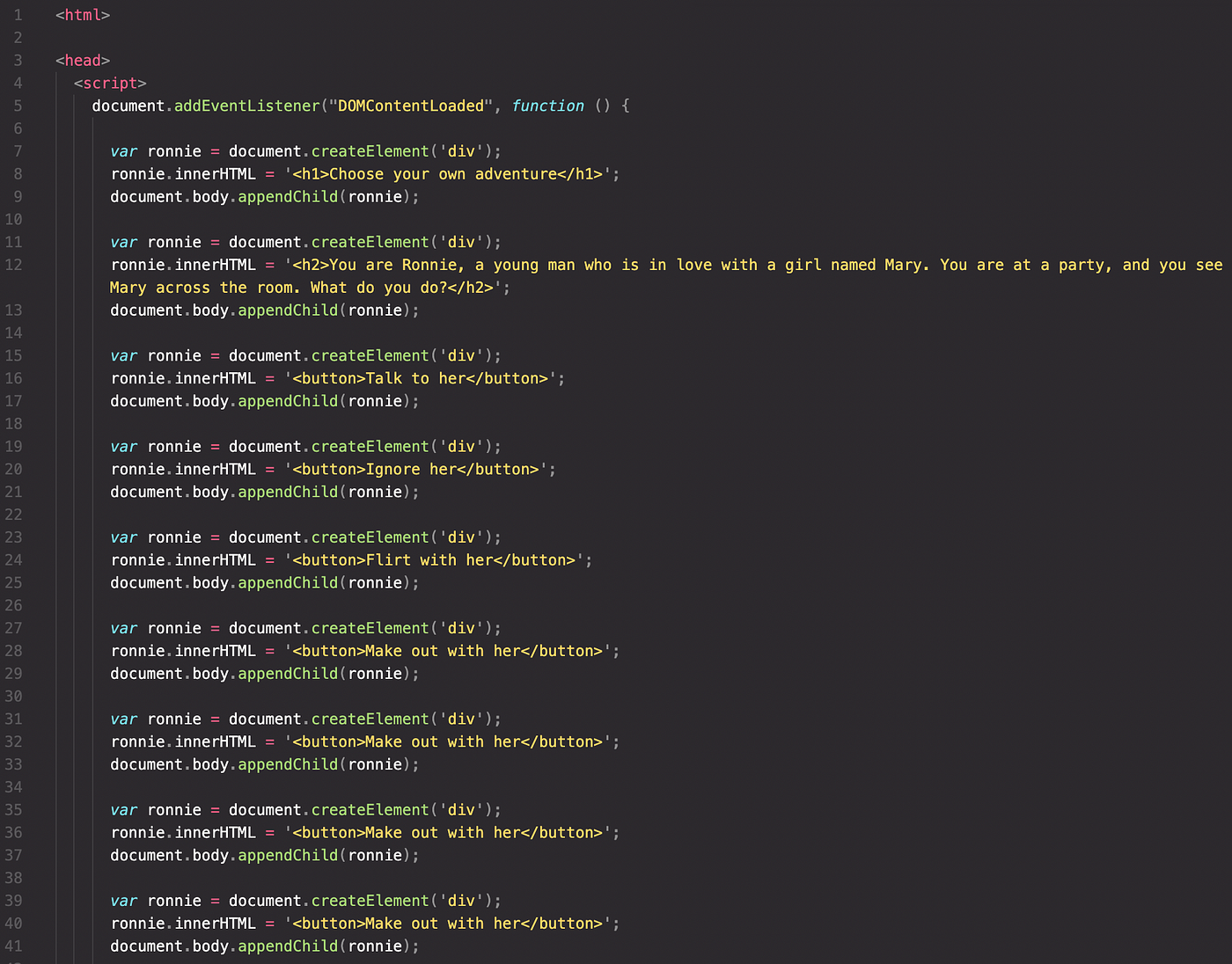

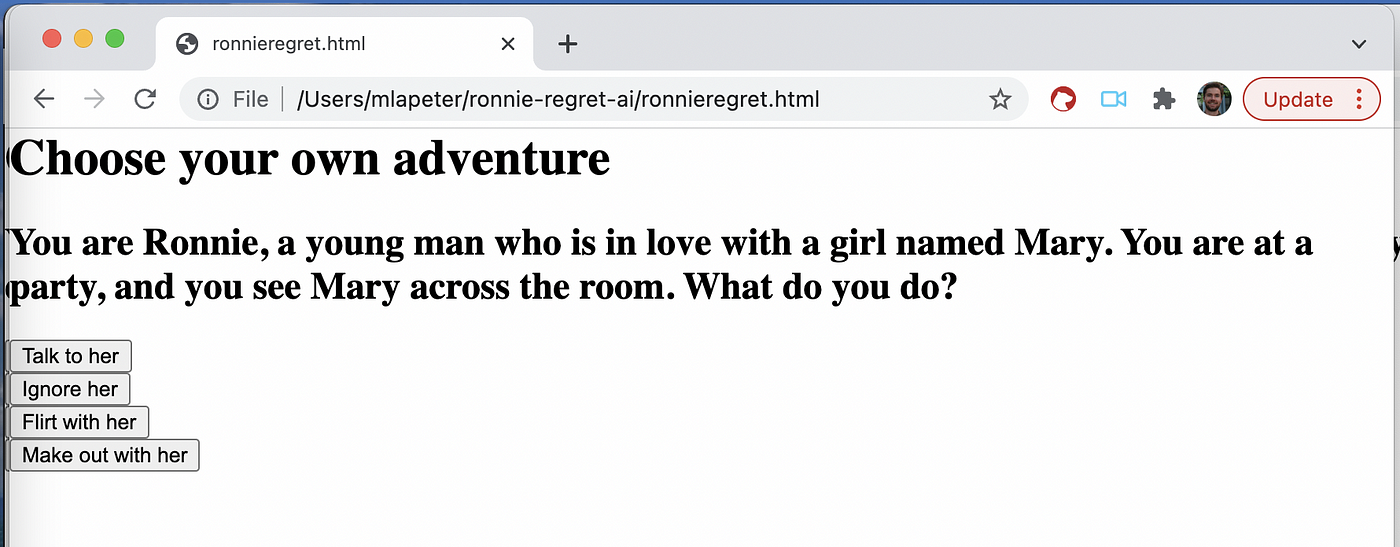

OpenAI Codex: You Must Ask the Right Questions, by Mike Lapeter (Medium)

Bookmarks: nlp.md at master, hyunjun/bookmarks (GitHub)

8 Awesome AI Tools That Can Generate Code to Help Programmers (Kwebby)

OpenAI Releases GLIDE: A Scaled-Down Text-to-Image Model That Rivals DALL-E Performance (Synced)

What Went Wrong with OpenAI's GPT-3 Pricing Model (r/GPT3)

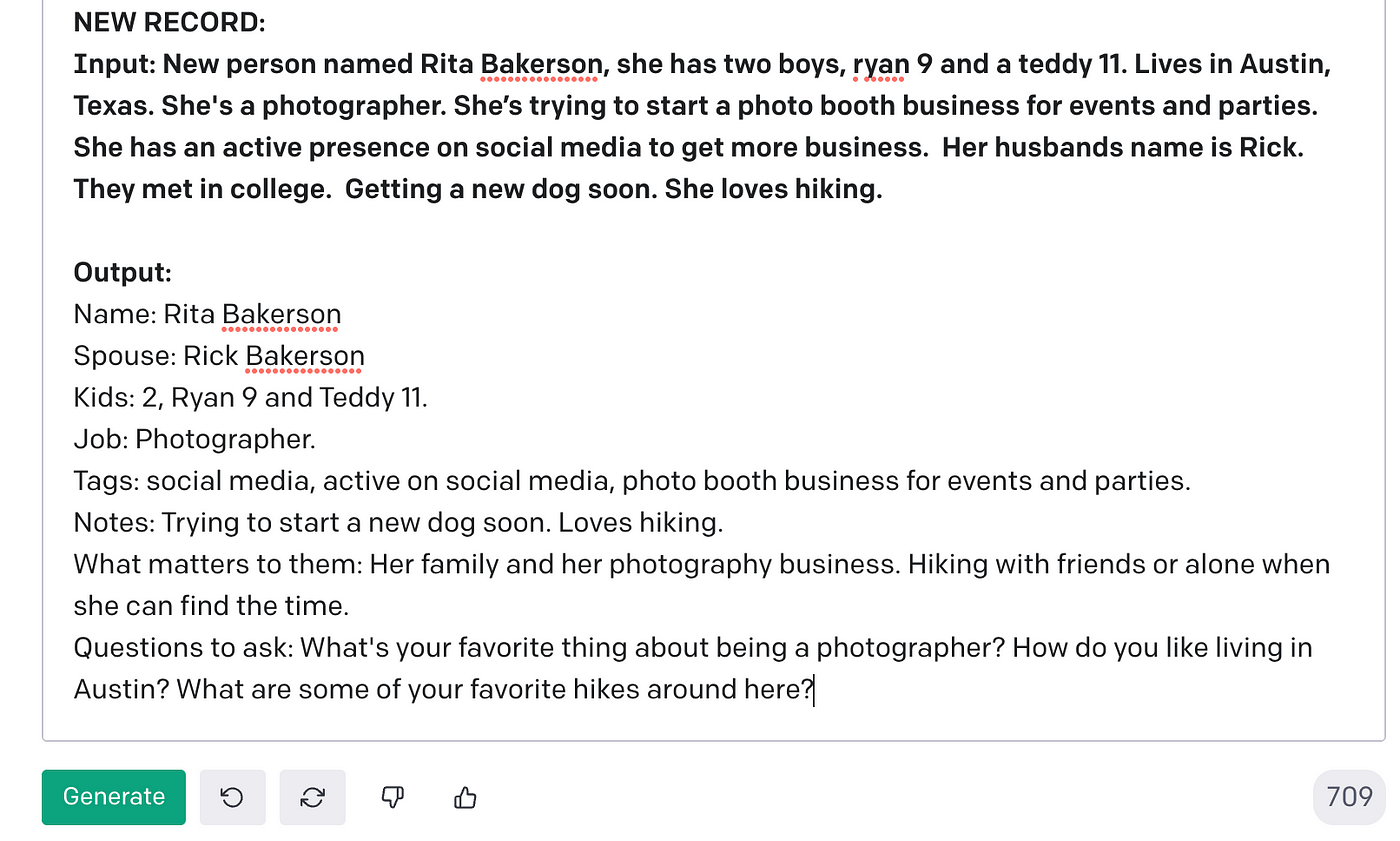

Using GPT-3 as a Personal CRM for People with Terrible Memories, by Mike Lapeter (Medium)

Kalyan Ks Kalyan Kpl Twitter

Work on AI's Most Exciting Frontier, No PhD Required